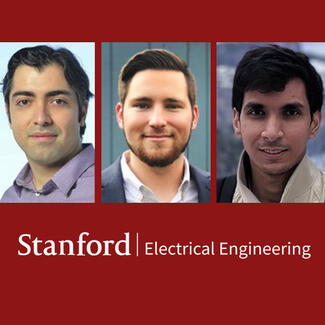

Professor Amin Arbabian and PhD candidates Aidan Fitzpatrick and Ajay Singhvi develop aerial-based remote underwater sonar imaging

Their paper is highlighted in Nature: "Airborne sonar spies on what lies beneath the waves."

In their recent paper, Professor Amin Arbabian and PhD candidates Aidan Fitzpatrick and Ajay Singhvi describe an aerial system that can image objects submerged in still or moving water. This marks an important milestone in the development of robust, remote underwater sensing systems.

Accelerated climate change has left humanity at a crucial inflection point in our history, with urgent calls for enhanced environmental monitoring and action. Oceans play a critical role in our ecosystem: they regulate weather and global temperature, and serve as the largest carbon sink and the greatest source of oxygen [6,7]. Despite that, more than 80% of the ocean remains unobserved and unmapped today [8]. Thus, it is imperative that we develop means to reliably and frequently sense the rapidly changing ocean biosphere at large scale [9]. Remote sensing of the ocean ecosystem also has high-impact applications in various other spheres, including disaster response, biological survey, archaeology, and wreckage searching.

Sonar is a mature technology that offers impressive high-resolution imaging of underwater environments; however, its performance remains fundamentally constrained by the carrying vehicle. Typically, sonar systems are mounted to or towed by a ship that traverses an area of interest, which limits frequent measurements and spatial coverage to a fraction of global waters. A paradigm shift in how we sense underwater environments is needed to bridge this large technological gap. Radar, lidar, and photographic imaging systems have enabled frequent, full-coverage measurements of the Earth’s landscapes, providing above-ground information on a global scale. Likewise, there is a great push to develop remote underwater imaging systems, which could have a similarly transformative effect on imaging and mapping underwater environments.

Today, airborne lidar is the primary imaging modality used for imaging underwater from aerial systems. These lidar systems exploit blue-green lasers which in clear waters are capable of penetrating as deep as 50 m. Unfortunately, most water is not clear, particularly coastal waters, which have high levels of turbidity and can restrict the light penetration to less than 1 m, making lidars unsuitable for use in a large proportion of underwater environments. To exploit the advantages of in-water sonar, while operating aerially, some researchers have explored approaches that use laser Doppler vibrometers (LDVs) to detect acoustic echoes from underwater targets. However, these optical detection methods lack robustness in uncontrolled environments and therefore previous demonstrations have been severely limited. The presence of ocean surface waves has proven to be a major bottleneck for real-world deployment of such remote underwater imaging systems and is thus a key challenge that needs to be overcome before ubiquitous remote underwater sensing becomes a reality.

To tackle the limitations of existing technologies, we introduce a photoacoustic airborne sonar system (PASS) that leverages the ideal properties of electromagnetic imaging in air and sonar imaging in water. In our previous work, we presented the concept of PASS and demonstrated preliminary two-dimensional (2D) imaging results in hydrostatic conditions. The primary focus of this work is to investigate and overcome the aforementioned fundamental challenge of a hydrodynamic water surface on remote underwater imaging systems such as PASS, thereby opening the door to future deployment in realistic, uncontrolled environments.

Excerpted from their paper, “Multi-modal sensor fusion towards three-dimensional airborne sonar imaging in hydrodynamic conditions,” published in Communications Engineering, a Nature Portfolio journal.

The paper's additional author is Roshan P. Mathews of the Department of Electrical Engineering, Indian Institute of Technology.