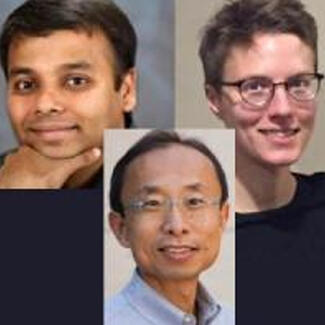

A system from Subhasish Mitra, H.S. Philip Wong, and Mary Wootters can run AI tasks faster and with less energy

New algorithms combine several energy-efficient hybrid chips to create the illusion of one mega–AI chip.

Professors Subhasish Mitra, H.S. Philip Wong, Mary Wootters, and their students recently published "Illusion of large on-chip memory by networked computing chips for neural network inference" in Nature Electronics.

Smartwatches and other battery-powered electronics would be even smarter if they could run AI algorithms. But efforts to build AI-capable chips for mobile devices have so far hit a wall: the so-called "memory wall" that separates data processing chips from memory chips, which must work together to meet the massive and continually growing computational demands imposed by AI.

"Transactions between processors and memory can consume 95 percent of the energy needed to do machine learning and AI, and that severely limits battery life," said Professor Subhasish Mitra.

The team has designed a system that can run AI tasks faster, and with less energy, by harnessing eight hybrid chips, each with its own data processor built right next to its own memory storage.

This paper builds on the team's prior development of a new memory technology, called RRAM (resistive random-access memory), that stores data even when power is switched off, like flash memory, but does so faster and more energy efficiently. That RRAM advance enabled the Stanford researchers to develop an earlier generation of hybrid chips that worked alone. Their latest design incorporates a critical new element: algorithms that meld the eight separate hybrid chips into one energy-efficient AI-processing engine.

Additional authors are Robert M. Radway, Andrew Bartolo, Paul C. Jolly, Zainab F. Khan, Binh Q. Le, Pulkit Tandon, Tony F. Wu, Yunfeng Xin, Elisa Vianello, Pascal Vivet, Etienne Nowak, Mohamed M. Sabry Aly, and Edith Beigne.

[Excerpted from "Stanford researchers combine processors and memory on multiple hybrid chips to run AI on battery-powered smart devices"]